Scheduling analysis when Nova creates a virtual machine

Basic concepts

Scheduler

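1. Scheduler: decides which Host an Instance runs on. Nova currently implements the following schedulers:

- ChanceScheduler (random scheduler): picks a Host Node at random from all hosts on which the nova-compute service is running normally, and creates the Instance there.
- FilterScheduler (filter scheduler): picks the best Host Node for the Instance according to the configured filter conditions and weights.
- CachingScheduler (caching scheduler): a variant of FilterScheduler that additionally caches Host resource information in local memory and refreshes it from the database through a periodic background task.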

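2. To make extension easy, Nova extracts the interface that every scheduler must implement into nova.scheduler.driver.Scheduler. Any class that inherits from it and implements its interface can serve as a custom scheduler.

3. Note: different schedulers cannot coexist. An option in /etc/nova/nova.conf specifies which scheduler to use; the default is FilterScheduler.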
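As a rough illustration of point 2, the sketch below mimics the idea of a pluggable scheduler without importing Nova. `BaseScheduler` is a hypothetical stand-in for nova.scheduler.driver.Scheduler, and `RandomScheduler`, the host names, and the `select_destinations(hosts, num_instances)` signature are assumptions made for this example, not Nova's real interface.

```python
import abc
import random


class BaseScheduler(abc.ABC):
    """Hypothetical stand-in for nova.scheduler.driver.Scheduler."""

    @abc.abstractmethod
    def select_destinations(self, hosts, num_instances):
        """Return one host name per requested instance."""


class RandomScheduler(BaseScheduler):
    """Picks hosts at random, in the spirit of ChanceScheduler."""

    def __init__(self, rng=None):
        # An injectable random source keeps the example testable.
        self.rng = rng or random.Random()

    def select_destinations(self, hosts, num_instances):
        if not hosts:
            raise ValueError("no valid host found")
        return [self.rng.choice(hosts) for _ in range(num_instances)]


hosts = ["compute-1", "compute-2", "compute-3"]
scheduler = RandomScheduler(random.Random(0))
picked = scheduler.select_destinations(hosts, 2)
print(picked)
```

Because the base class only fixes the method that must exist, swapping in a different strategy means writing another subclass; the caller does not change.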


Filter scheduler

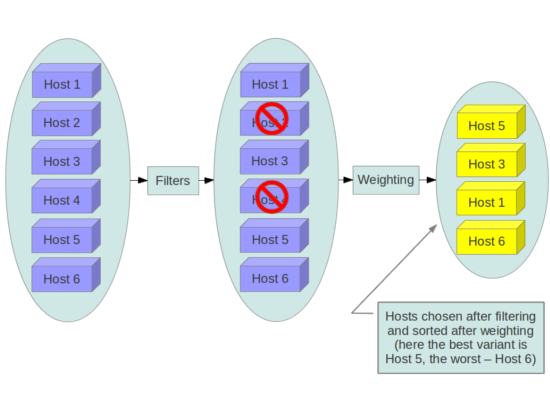

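1. FilterScheduler first uses the configured Filters to keep only the Hosts that meet the conditions, then computes a weight (Weighting) for each Host in the resulting list and sorts them to obtain the best Host.

2. The filter scheduler is built mainly from multiple filters, all of which live under the nova.scheduler.filters package.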
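The filter-then-weigh idea above can be sketched in a few lines. This is a simplified illustration, not Nova's implementation: the `Host` tuple, the two filter functions, and the use of free RAM as the only weight are assumptions chosen for the example.

```python
from collections import namedtuple

# Hypothetical host record; real Nova uses HostState objects.
Host = namedtuple("Host", ["name", "free_ram_mb", "free_vcpus"])


def ram_filter(host, request):
    """Pass hosts with enough free memory for the request."""
    return host.free_ram_mb >= request["ram_mb"]


def vcpu_filter(host, request):
    """Pass hosts with enough free vCPUs for the request."""
    return host.free_vcpus >= request["vcpus"]


def filter_and_weigh(hosts, request, filters):
    # Step 1: keep only hosts that pass every filter.
    passed = [h for h in hosts if all(f(h, request) for f in filters)]
    # Step 2: order the survivors, most free RAM first.
    return sorted(passed, key=lambda h: h.free_ram_mb, reverse=True)


hosts = [
    Host("compute-1", free_ram_mb=2048, free_vcpus=2),
    Host("compute-2", free_ram_mb=8192, free_vcpus=1),
    Host("compute-3", free_ram_mb=4096, free_vcpus=8),
]
request = {"ram_mb": 1024, "vcpus": 2}
ranked = filter_and_weigh(hosts, request, [ram_filter, vcpu_filter])
print([h.name for h in ranked])  # ['compute-3', 'compute-1']
```

Here compute-2 has the most memory but is dropped by the vCPU filter before any weighing happens, which is the point of filtering first.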

Code architecture diagram

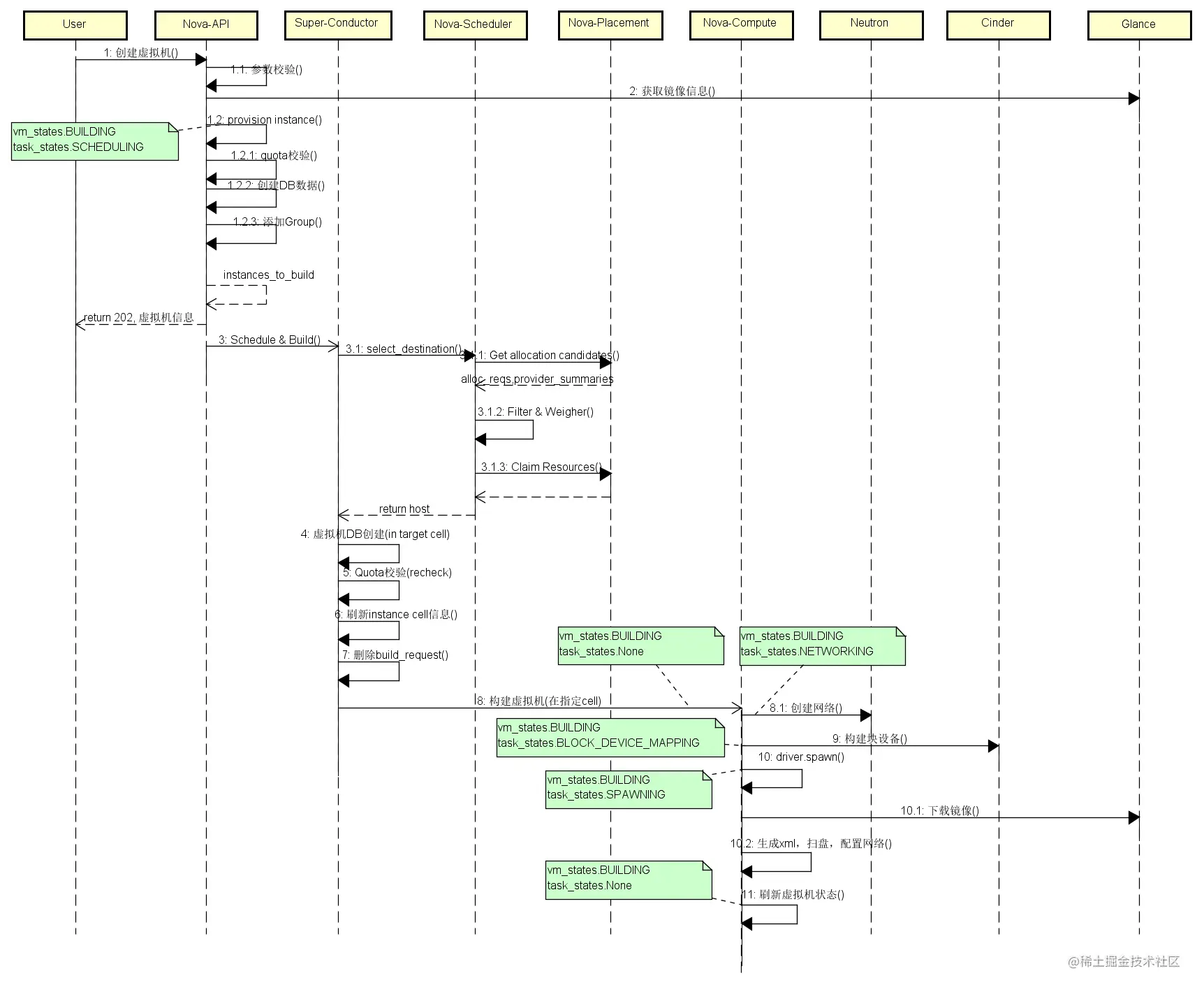

Sequence diagram for creating a virtual machine

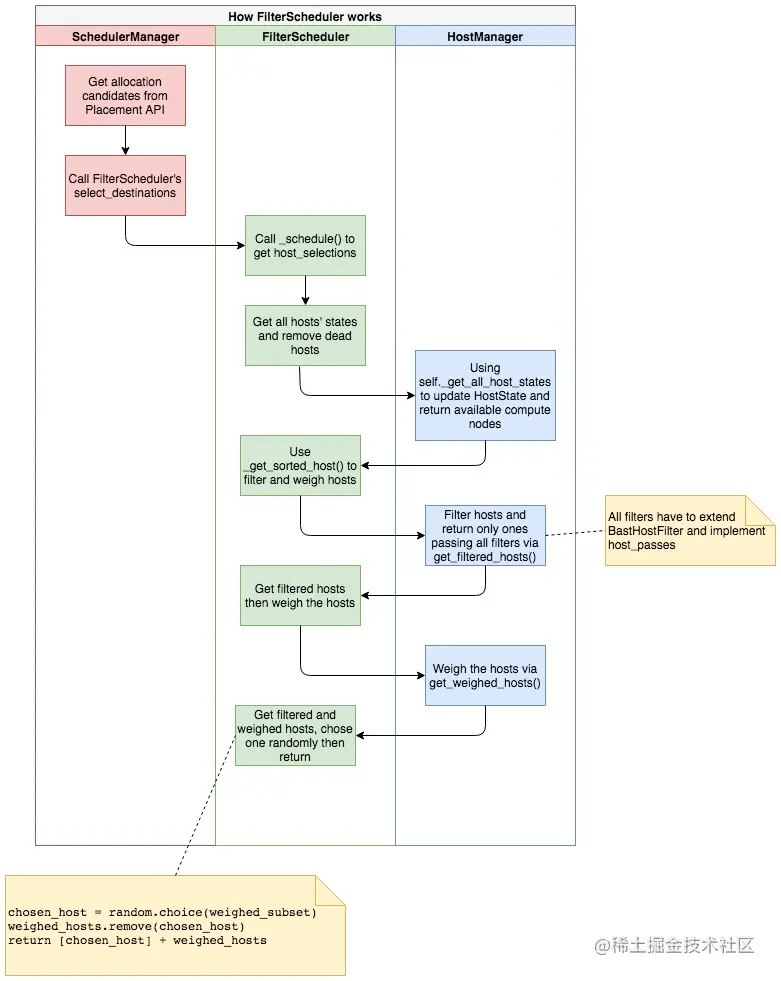

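Sequence diagram of the scheduler

The sequence diagram of the scheduler at work is as follows:

Analyzing the scheduling flow from the logs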

1. Entry point: nova.scheduler.manager::SchedulerManager:select_destinations

2. Request the candidate nodes from the Placement API

3. nova.scheduler.filter_scheduler::FilterScheduler:_schedule: the main logic of the scheduler

4. nova.scheduler.filter_scheduler::FilterScheduler:_get_all_host_states: queries compute_nodes and updates host_state

5. nova.scheduler.filter_scheduler::FilterScheduler:_get_sorted_hosts: runs every filter to filter the nodes

6. nova.scheduler.utils:claim_resources: calls the Placement API to claim resources
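Put together, the six steps amount to: ask Placement for candidates, build a view of those hosts, sort them, and claim resources on a chosen host. The sketch below walks through that order with in-memory stand-ins. `FakePlacement`, its method signatures, and the host data are hypothetical and only mirror the roles of the real Placement client; trying the next host when a claim fails is an assumption made for this example, not something shown in the steps above.

```python
class FakePlacement:
    """Hypothetical in-memory stand-in for the Placement API client."""

    def __init__(self, free_vcpus):
        self.free_vcpus = dict(free_vcpus)  # host name -> free vCPUs

    def get_allocation_candidates(self, vcpus):
        # Step 2: hosts that appear able to hold the request.
        return [h for h, free in self.free_vcpus.items() if free >= vcpus]

    def claim(self, host, vcpus):
        # Step 6: the claim can still fail if the capacity is gone.
        if self.free_vcpus.get(host, 0) < vcpus:
            return False
        self.free_vcpus[host] -= vcpus
        return True


def schedule(placement, vcpus):
    candidates = placement.get_allocation_candidates(vcpus)
    # Steps 4 and 5: look up each host's state and sort, most free first.
    ranked = sorted(candidates, key=lambda h: placement.free_vcpus[h],
                    reverse=True)
    for host in ranked:
        if placement.claim(host, vcpus):
            return host
    return None  # no valid host


placement = FakePlacement({"compute-1": 4, "compute-2": 16, "compute-3": 1})
first = schedule(placement, vcpus=4)
second = schedule(placement, vcpus=16)
print(first, second)
```

The second request finds no host because the first claim already consumed part of compute-2, which is why the claim is a separate step from candidate selection.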

Analyzing the scheduling flow from the source code

Phase 1: Request flow

- Call chain:

nova-conductor ==> RPC scheduler_client.select_destinations() ==> nova-scheduler

- Initiator:

The function call chain in nova-conductor is as follows:

    # nova.conductor.manager.ComputeTaskManager:build_instances()
    def build_instances(self, context, instances, image, filter_properties,
            admin_password, injected_files, requested_networks,
            security_groups, block_device_mapping=None, legacy_bdm=True,
            request_spec=None, host_lists=None):
        # (abridged: spec_obj and instance_uuids are prepared in code omitted here)
        # get appropriate hosts for the request.
        host_lists = self._schedule_instances(context, spec_obj,
                instance_uuids, return_alternates=True)

    def _schedule_instances(self, context, request_spec,
                            instance_uuids=None, return_alternates=False):
        scheduler_utils.setup_instance_group(context, request_spec)
        host_lists = self.scheduler_client.select_destinations(context,
                request_spec, instance_uuids, return_objects=True,
                return_alternates=return_alternates)
        return host_lists

The call chain of the RPC scheduler_client.select_destinations() function is as follows:

    # nova.scheduler.client.query.SchedulerQueryClient:select_destinations()
    from nova.scheduler import rpcapi as scheduler_rpcapi

    class SchedulerQueryClient(object):
        """Client class for querying to the scheduler."""

        def __init__(self):
            self.scheduler_rpcapi = scheduler_rpcapi.SchedulerAPI()

        def select_destinations(self, context, spec_obj, instance_uuids,
                return_objects=False, return_alternates=False):
            LOG.debug("SchedulerQueryClient starts to make a RPC call.")
            return self.scheduler_rpcapi.select_destinations(context, spec_obj,
                    instance_uuids, return_objects, return_alternates)

    # nova.scheduler.rpcapi.SchedulerAPI:select_destinations()
    def select_destinations(self, ctxt, spec_obj, instance_uuids,
            return_objects=False, return_alternates=False):
        # Pack the arguments into the RPC message
        msg_args = {'instance_uuids': instance_uuids,
                    'spec_obj': spec_obj,
                    'return_objects': return_objects,
                    'return_alternates': return_alternates}
        cctxt = self.client.prepare(version='4.5')
        return cctxt.call(ctxt, 'select_destinations', **msg_args)

Phase 2: The RPC request reaches the SchedulerManager of the nova-scheduler service

    # nova.scheduler.manager.SchedulerManager:select_destinations()
    class SchedulerManager(manager.Manager):
        """Chooses a host to run instances on."""

        target = messaging.Target(version='4.5')

        def __init__(self, scheduler_driver=None, *args, **kwargs):
            if not scheduler_driver:
                scheduler_driver = CONF.scheduler_driver
            # The driver is an object of the class named by the configuration
            # option, e.g. nova.scheduler.filter_scheduler.FilterScheduler
            self.driver = driver.DriverManager(
                    "nova.scheduler.driver",
                    scheduler_driver,
                    invoke_on_load=True).driver
            super(SchedulerManager, self).__init__(service_name='scheduler',
                                                   *args, **kwargs)

        def select_destinations(self, ctxt, request_spec=None,
                filter_properties=None, spec_obj=_sentinel, instance_uuids=None,
                return_objects=False, return_alternates=False):
            # (abridged: resources and alloc_reqs_by_rp_uuid are computed in
            # code omitted from this excerpt)
            # get allocation candidates from nova placement api
            res = self.placement_client.get_allocation_candidates(ctxt, resources)
            (alloc_reqs, provider_summaries, allocation_request_version) = res
            selections = self.driver.select_destinations(ctxt, spec_obj,
                    instance_uuids, alloc_reqs_by_rp_uuid, provider_summaries,
                    allocation_request_version, return_alternates)

- Key function:

self.placement_client.get_allocation_candidates(ctxt, resources) calls the nova-placement API to obtain the candidate nodes.

Phase 3: From SchedulerManager to the FilterScheduler

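High-level flow

Two important functions in detail

- _get_all_host_states: gets the state of every Host and keeps the Hosts that pass the preliminary conditions.
- _get_sorted_hosts: filters the hosts returned by the first function once more using the Filters, then uses weighing to select the best Host.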

_get_all_host_states function

host_manager is a member variable of nova.scheduler.driver.Scheduler:

    # nova.scheduler.driver.Scheduler:__init__()
    # nova.scheduler.filter_scheduler.FilterScheduler inherits from nova.scheduler.driver.Scheduler
    class Scheduler(object):
        """The base class that all Scheduler classes should inherit from."""

        def __init__(self):
            # host_manager is imported dynamically according to the configuration file
            self.host_manager = importutils.import_object(
                CONF.scheduler_host_manager)
            self.servicegroup_api = servicegroup.API()

_get_all_host_states calls a function of the host_manager member variable, as shown below:

    def _get_all_host_states(self, context, spec_obj, provider_summaries):
        compute_uuids = None
        if provider_summaries is not None:
            compute_uuids = list(provider_summaries.keys())
        return self.host_manager.get_host_states_by_uuids(context, compute_uuids, spec_obj)

The get_host_states_by_uuids function of host_manager is as follows:

    def get_host_states_by_uuids(self, context, compute_uuids, spec_obj):
        self._load_cells(context)
        if (spec_obj and 'requested_destination' in spec_obj and
                spec_obj.requested_destination and
                'cell' in spec_obj.requested_destination):
            only_cell = spec_obj.requested_destination.cell
        else:
            only_cell = None

        if only_cell:
            cells = [only_cell]
        else:
            cells = self.cells
        # Query the compute_nodes information and pass it on to the next call
        compute_nodes, services = self._get_computes_for_cells(
            context, cells, compute_uuids=compute_uuids)
        return self._get_host_states(context, compute_nodes, services)

The _get_host_states function is as follows:

    def _get_host_states(self, context, compute_nodes, services):
        """Returns a generator over HostStates given a list of computes.

        Also updates the HostStates internal mapping for the HostManager.
        """
        # Get resource usage across the available compute nodes:
        host_state_map = {}
        seen_nodes = set()
        for cell_uuid, computes in compute_nodes.items():
            for compute in computes:
                service = services.get(compute.host)

                if not service:
                    LOG.warning(_LW(
                        "No compute service record found for host %(host)s"),
                        {'host': compute.host})
                    continue
                host = compute.host
                node = compute.hypervisor_hostname
                state_key = (host, node)
                host_state = host_state_map.get(state_key)
                if not host_state:
                    host_state = self.host_state_cls(host, node,
                                                     cell_uuid,
                                                     compute=compute)
                    host_state_map[state_key] = host_state
                # We force to update the aggregates info each time a
                # new request comes in, because some changes on the
                # aggregates could have been happening after setting
                # this field for the first time
                host_state.update(compute,
                                  dict(service),
                                  self._get_aggregates_info(host),
                                  self._get_instance_info(context, compute))

                seen_nodes.add(state_key)

        return (host_state_map[host] for host in seen_nodes)

- Summary:

The main job of _get_all_host_states is to query the compute_nodes information (from the database) and store it in HostState objects.
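To make the summary concrete, here is a small self-contained sketch of the same bookkeeping: compute nodes are recorded under a (host, node) key, and nodes whose host has no service record are skipped. `ComputeNode`, the sample data, and the plain dicts used as host state are illustrative assumptions; the real code builds HostState objects from database records.

```python
from collections import namedtuple

# Hypothetical, trimmed-down compute node record.
ComputeNode = namedtuple("ComputeNode",
                         ["host", "hypervisor_hostname", "free_ram_mb"])


def build_host_states(compute_nodes, services):
    """Map (host, node) -> state dict for nodes with a known service."""
    host_state_map = {}
    for cell_uuid, computes in compute_nodes.items():
        for compute in computes:
            service = services.get(compute.host)
            if not service:
                # Same idea as the warning-and-continue branch above.
                continue
            state_key = (compute.host, compute.hypervisor_hostname)
            host_state_map[state_key] = {
                "cell_uuid": cell_uuid,
                "free_ram_mb": compute.free_ram_mb,
                "service_disabled": service["disabled"],
            }
    return host_state_map


compute_nodes = {
    "cell-1": [ComputeNode("host-a", "node-a", 4096),
               ComputeNode("host-b", "node-b", 2048)],
}
services = {"host-a": {"disabled": False}}  # host-b has no service record
states = build_host_states(compute_nodes, services)
print(sorted(states))  # [('host-a', 'node-a')]
```

Keying on the (host, node) pair rather than the host alone allows one compute service to report more than one hypervisor node.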

_get_sorted_hosts function
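As a hedged illustration of the weighing step that _get_sorted_hosts is described as performing, the sketch below scales each metric to the range 0 to 1, multiplies it by a per-metric multiplier, sums the results, and orders hosts by the total. The host data, the metric names, and the multipliers are assumptions for illustration; min-max scaling is one common way to combine heterogeneous metrics and is not claimed to match Nova line for line.

```python
def normalize(values):
    """Scale a list of numbers to [0, 1]; equal values all map to 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def weigh_hosts(hosts, weighers):
    """Return host names sorted by total weight, best first.

    hosts:    dict of host name -> dict of raw metrics
    weighers: list of (metric name, multiplier) pairs
    """
    names = list(hosts)
    totals = {name: 0.0 for name in names}
    for metric, multiplier in weighers:
        scaled = normalize([hosts[n][metric] for n in names])
        for name, value in zip(names, scaled):
            totals[name] += multiplier * value
    return sorted(names, key=lambda n: totals[n], reverse=True)


hosts = {
    "compute-1": {"free_ram_mb": 2048, "free_disk_gb": 500},
    "compute-2": {"free_ram_mb": 8192, "free_disk_gb": 100},
    "compute-3": {"free_ram_mb": 4096, "free_disk_gb": 300},
}
# RAM counts twice as much as disk in this example.
ranking = weigh_hosts(hosts, [("free_ram_mb", 2.0), ("free_disk_gb", 1.0)])
print(ranking)  # ['compute-2', 'compute-3', 'compute-1']
```

Scaling before summing keeps a metric measured in megabytes from drowning out one measured in gigabytes; the multipliers then express the intended priority.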

Other services involved in scheduling

nova placement api
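The Placement calls in this section are plain HTTP requests. As a small illustration, the sketch below builds the query string for an allocation-candidates request from a dict of resource amounts. The helper name `build_candidates_url`, the base URL `http://placement.example`, and the alphabetical ordering of resource classes are assumptions for this example.

```python
from urllib.parse import urlencode


def build_candidates_url(base_url, resources, limit=None):
    """Build a GET /allocation_candidates URL.

    resources: dict such as {"VCPU": 1, "MEMORY_MB": 512}
    """
    # Resource classes are joined as CLASS:amount pairs.
    pairs = ",".join(f"{rc}:{amount}"
                     for rc, amount in sorted(resources.items()))
    params = {"resources": pairs}
    if limit is not None:
        params["limit"] = limit
    # Keep ':' and ',' readable instead of percent-encoding them.
    query = urlencode(params, safe=":,")
    return f"{base_url}/allocation_candidates?{query}"


url = build_candidates_url(
    "http://placement.example",
    {"VCPU": 1, "MEMORY_MB": 512, "DISK_GB": 1},
    limit=1000,
)
print(url)
```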

1. Get allocation candidates

- Description: selects candidates for resource allocation
- Endpoint: GET /placement/allocation_candidates
- Request parameters: resources=DISK_GB:1,MEMORY_MB:512,VCPU:1

Request example:

    curl -H "x-auth-token:$(openstack token issue | awk '/ id /{print $(NF-1)}')" -H 'OpenStack-API-Version: placement 1.17' 'http://nova-placement-api.cty.os:10013/allocation_candidates?limit=1000&resources=INSTANCE:10,MEMORY_MB:4096,VCPU:4' | python -m json.tool

Response example:

    {
        "provider_summaries": {
            "4cae2ef8-30eb-4571-80c3-3289e86bd65c": {
                "resources": {
                    "VCPU": {
                        "used": 2,
                        "capacity": 64
                    },
                    "MEMORY_MB": {
                        "used": 1024,
                        "capacity": 11374
                    },
                    "DISK_GB": {
                        "used": 2,
                        "capacity": 49
                    }
                }
            }
        },
        "allocation_requests": [
            {
                "allocations": [
                    {
                        "resource_provider": {
                            "uuid": "4cae2ef8-30eb-4571-80c3-3289e86bd65c"
                        },
                        "resources": {
                            "VCPU": 1,
                            "MEMORY_MB": 512,
                            "DISK_GB": 1
                        }
                    }
                ]
            }
        ]
    }

2. Claim Resources

- Description: allocates (claims) resources
- Endpoint: PUT /allocations/{consumer_uuid}

Request body:

    {
        "allocations": {
            "4e061c03-611e-4caa-bf26-999dcff4284e": {
                "resources": {
                    "DISK_GB": 20
                }
            },
            "89873422-1373-46e5-b467-f0c5e6acf08f": {
                "resources": {
                    "MEMORY_MB": 1024,
                    "VCPU": 1
                }
            }
        },
        "consumer_generation": 1,
        "user_id": "66cb2f29-c86d-47c3-8af5-69ae7b778c70",
        "project_id": "42a32c07-3eeb-4401-9373-68a8cdca6784",
        "consumer_type": "INSTANCE"
    }

Response example:

    {
        "allocations": {
            "4e061c03-611e-4caa-bf26-999dcff4284e": {
                "resources": {
                    "DISK_GB": 20
                }
            },
            "89873422-1373-46e5-b467-f0c5e6acf08f": {
                "resources": {
                    "MEMORY_MB": 1024,
                    "VCPU": 1
                }
            }
        },
        "consumer_generation": 1,
        "user_id": "66cb2f29-c86d-47c3-8af5-69ae7b778c70",
        "project_id": "42a32c07-3eeb-4401-9373-68a8cdca6784",
        "consumer_type": "INSTANCE"
    }

Database models involved in scheduling